The 2008 PC Builder's Bible

Find the best parts. Learn to build a rig from scratch and overclock it to kingdom come. PC Gamer shows you how.

Warning: If you’re serious about building a game machine, do not skimp on your video card! All the latest graphically intense games (Supreme Commander, Unreal Tournament III, and Crysis, just to name a few) look absolutely incredible with the resolution, anti-aliasing, and detail settings cranked up to the max. This is truly the way it was meant to be played.

But you won’t even get close to realizing this gaming dream without investing a serious slice of your budget in a monster video card. There is no sight on Earth sadder to a gamer’s eye than seeing a potentially beautiful game reduced to minimal graphics settings and resolution, and still chugging along with a low frame rate. Don’t let this happen to you.

The graphics card is the single biggest factor (though not the only factor) in determining how fast your computer will be able to run the latest frag fest or grand strategy game. Choosing last year’s card will earn you some pretty choppy frame rates, and that simply won’t do.

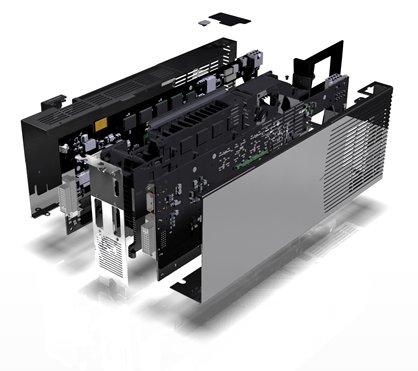

Although there used to be two separate types of videocards — 2D cards for desktop work and 3D cards for games — today’s videocards do everything in one sexy silicon package. And over the years, as games have become increasingly complex and more lifelike, videocard development has accelerated, rapidly bringing Finding Nemo-quality graphics on your desktop closer and closer to reality. While that day is still a ways out, modern videocards are technological wonders that are just as complex (and just as expensive, unfortunately) as some high-end CPUs.

Above: NVIDIA’s 8800GT

The consumer videocard market is currently dominated by just two companies: ATI and NVIDIA. Today, DirectX 10 cards like NVIDIA’s 9800 line pretty much trounce the competition in performance, though ATI’s brand-new cards based on its RV670 chip put up a tough fight. And with NVIDIA’s SLI or ATI’s Crossfire technology, which allows you to run two high-powered cards in tandem for a huge bump in performance, game graphics are experiencing an unprecedented boost in hardware power.

With that in mind, this may be the most important section of this article. We’ll help you find the right card, starting by answering some frequently asked questions.


Q: Are onboard graphics really that bad? Are integrated graphics any good? Can they run a game like Assassin’s Creed?

A: Integrated graphics—that is, graphics that are built directly into a motherboard—are designed to provide minimal 3D performance in exchange for reduced cost. They’re not designed for gaming, but rather simple 2D desktop work. As such, anyone serious about gaming should never consider using integrated graphics.

Q: If I buy a top-of-the-line videocard today, how long will it be a viable solution for good gaming?

A: In general, a high-end videocard should be extremely capable for at least a year, and probably longer depending on what kind of frame rates you demand and the kind of high-end features you’d like to be able to enable. There are games out today, for example, that run just fine on 3-year-old cards, but that’s typically because the 3D engine used in those games came out at roughly the same time as the card. Play a brand-new game with a modern engine on the same card, however, and it’ll probably run like a slide show, if at all.

Above: Assassin’s Creed in DirectX 10

While the videocard industry generally relies on a six-month refresh cycle for all of its cards (meaning that you’ll usually see new cards from both ATI and NVIDIA twice each year), the game industry moves at a much slower pace. All 3D games run on their own “engine” — a massive pile of code that, among other things, determines the visual quality of the game you eventually see on your screen. These engines take years to develop, and are as forward-looking as possible, meaning they are designed to run on hardware that won’t even exist until several years down the road! As a result, many brand-new engines/games are brutal on PC hardware when they’re first released. But over time, hardware catches up and eventually surpasses the 3D engine’s capabilities.

A classic example is id Software’s Quake III. When it was first released several years ago, only the highest-end cards could run the game at a constant 30 frames per second. Today, the latest hardware runs that same engine at several hundred frames per second. And a few years from today, new, as-yet-unimagined video cards should churn through Quake 4 and Oblivion in the same way!

The boxes videocards come in are filled with mumbo-jumbo touting often obscure features and wildly out-of-context performance numbers. Here are the key features that really matter.

DirectX 10: The most important thing to know about DX10 is that both AMD and NVIDIA GPUs that support it feature a unified architecture. This means that any or all of the processor’s computational units (aka stream processors) can be dedicated to executing any type of shader instruction, be it vertex, pixel, or geometry. Because the GPU can shift its resources to whatever kind of work a scene demands, and that flexibility speeds up DirectX 9 games too, DX10 compatibility is a desirable feature even if you don’t plan on running Vista.
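
To see why a unified design helps, here is a toy model in Python. It’s a minimal sketch, not how any real GPU schedules work: it assumes each unit retires one shader instruction per cycle, and the unit counts (8 vertex plus 24 pixel units versus a pool of 32 unified units) and the per-scene workloads are hypothetical numbers chosen for illustration.

```python
import math

def cycles_fixed(vertex_work, pixel_work, vertex_units, pixel_units):
    """Cycles to finish a frame when each unit is hard-wired to one shader type.

    Assumes every unit retires one instruction per cycle. The frame is done
    only when the slower of the two pools finishes its share.
    """
    vertex_cycles = math.ceil(vertex_work / vertex_units)
    pixel_cycles = math.ceil(pixel_work / pixel_units)
    return max(vertex_cycles, pixel_cycles)

def cycles_unified(vertex_work, pixel_work, total_units):
    """Cycles to finish a frame when any unit can run any shader type."""
    return math.ceil((vertex_work + pixel_work) / total_units)

# Hypothetical GPU: 8 vertex + 24 pixel units, or the same 32 units unified.
scenes = {
    "pixel-heavy": (800, 24000),   # (vertex instructions, pixel instructions)
    "vertex-heavy": (8000, 4800),
    "matched mix": (2400, 7200),
}
for name, (v, p) in scenes.items():
    print(name, cycles_fixed(v, p, 8, 24), cycles_unified(v, p, 32))
```

This prints 1000 vs. 775 cycles for the pixel-heavy scene, 1000 vs. 400 for the vertex-heavy one, and 300 vs. 300 for the matched mix. In this model the unified pool is never slower than the fixed split, and it pulls ahead whenever a scene’s mix of work doesn’t match the hard-wired ratio.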

Memory Interface: In theory, a GPU with a 512-bit interface to memory will perform faster than one with a 256-bit memory interface. But don’t be confused by AMD’s 512-bit “ring bus” memory architecture. Inside the GPU, the ring bus is 512 bits wide across the board, but only the company’s high-end GPUs have a true 512-bit interface to memory; its lesser components have only 128- and 256-bit paths to memory. And don’t judge a card based solely on its memory interface: NVIDIA’s 8800 GTX and 8800 Ultra are considerably faster than AMD’s ATI Radeon HD 2900 XT despite their narrower 384-bit memory interface.
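
Why a wider interface is faster “in theory” comes down to peak bandwidth: bytes moved per transfer times transfers per second. The sketch below assumes double-data-rate memory (such as GDDR3), which makes two transfers per clock, and the data rates it uses are hypothetical round numbers, not the specs of any particular card.

```python
def peak_bandwidth_gbs(bus_width_bits, effective_rate_mts):
    """Theoretical peak memory bandwidth in GB/s (1 GB = 1,000 MB).

    bus_width_bits:     width of the GPU's interface to memory (e.g. 256, 384, 512)
    effective_rate_mts: effective data rate in megatransfers per second; for
                        double-data-rate memory this is twice the memory clock in MHz
    """
    bytes_per_transfer = bus_width_bits / 8
    megabytes_per_sec = bytes_per_transfer * effective_rate_mts
    return megabytes_per_sec / 1000

# Hypothetical memory clocked at 1000MHz (2000MT/s effective) on three bus widths.
for width in (256, 384, 512):
    print(f"{width}-bit: {peak_bandwidth_gbs(width, 2000):.1f} GB/s")

# A narrower bus with faster memory can match a wider bus with slower memory.
print(peak_bandwidth_gbs(384, 2400), peak_bandwidth_gbs(512, 1800))
```

At the same data rate, bandwidth scales directly with bus width (64, 96, and 128 GB/s here), but the last line shows a 384-bit bus with faster memory tying a 512-bit bus with slower memory at 115.2 GB/s. That’s one more reason the bit-width printed on the box isn’t the whole story.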

Stream Processors: Unlike CPUs, which have one to four processing cores on a single die, modern GPUs consist of dozens of computational units known as stream processors. As with the GPU’s memory interface, however, simply counting the number of stream processors doesn’t necessarily indicate that one videocard is more powerful than another. AMD’s ATI Radeon HD 2900 XT, for example, is much slower than NVIDIA’s GeForce 8800 GTX even though the GeForce has only 128 stream processors to the Radeon’s 320.
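
A rough way to see why counting stream processors misleads: effective shader throughput depends on the count, the clock the shaders run at, and how much of the time they’re actually kept busy. In this minimal sketch, “Card A” and “Card B” and all of their figures, including the utilization percentages, are hypothetical and are not measurements of the Radeon or GeForce parts named above.

```python
def effective_shader_gops(stream_processors, shader_clock_mhz, utilization):
    """Effective shader throughput in billions of operations per second.

    Simplifying assumption: each stream processor can issue one operation per
    shader clock. utilization (0.0 to 1.0) is the fraction of cycles the units
    do useful work, which depends on the architecture and the game.
    """
    ops_per_sec = stream_processors * shader_clock_mhz * 1e6 * utilization
    return ops_per_sec / 1e9

# Hypothetical cards: A has more, slower, less-busy units; B has fewer, faster ones.
card_a = effective_shader_gops(320, 750, 0.60)
card_b = effective_shader_gops(128, 1350, 0.95)
print(f"Card A: {card_a:.1f} GOPS, Card B: {card_b:.1f} GOPS")
```

Despite having well under half as many stream processors, the hypothetical Card B comes out ahead (roughly 164 vs. 144 GOPS), because its units run faster and sit idle less often.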

HDMI: If you purchased a new big-screen TV, it’s probably outfitted with an HDMI port, either instead of or in addition to a DVI port. The big difference is that HDMI is capable of receiving both digital video and digital audio over the same cable. Videocards based on AMD’s new GPUs are capable of taking audio from the motherboard and sending it out through an HDMI adapter that connects to the card’s DVI port. With an NVIDIA card, audio must be routed to your display or A/V receiver over a separate cable.

HDCP: This acronym refers to the copy-protection scheme deployed in commercial Blu-ray and HD DVD movies. In order to transmit the audio and video material on these discs to your display in the digital domain, both the videocard and the display must be outfitted with an HDCP decryption ROM. This copy protection is not currently enforced if the signal is transmitted in the analog domain. (See also Dual-Link DVI)

Dual-Link DVI: Driving a 30-inch LCD at its native resolution of 2560x1600 requires a videocard with Dual-Link DVI, which is relatively common in mid-range and high-end products. What’s not so common is a videocard that supports HDCP on Dual-Link DVI; without that feature, the maximum resolution at which you can watch Blu-ray and HD DVD movies is 1280x800.
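
Why 2560x1600 needs Dual-Link DVI comes down to pixel clock. A single DVI link tops out at a 165MHz pixel clock, and a dual link doubles the available bandwidth. The sketch below estimates the pixel clock a display mode requires; the blanking intervals (160 pixels horizontally, 46 lines vertically) are assumptions loosely modeled on reduced-blanking timings, since a real monitor reports its exact timings through its EDID.

```python
SINGLE_LINK_MAX_MHZ = 165.0  # pixel-clock ceiling of one DVI link

def required_pixel_clock_mhz(width, height, refresh_hz=60, h_blank=160, v_blank=46):
    """Approximate pixel clock (MHz) needed to drive a mode.

    h_blank / v_blank are ASSUMED blanking intervals in pixels / lines;
    actual values depend on the monitor's timings.
    """
    total_pixels_per_frame = (width + h_blank) * (height + v_blank)
    return total_pixels_per_frame * refresh_hz / 1e6

def dvi_link_needed(width, height, refresh_hz=60):
    """Classify a mode as fitting single-link, dual-link, or neither."""
    clock = required_pixel_clock_mhz(width, height, refresh_hz)
    if clock <= SINGLE_LINK_MAX_MHZ:
        return "single-link"
    if clock <= 2 * SINGLE_LINK_MAX_MHZ:
        return "dual-link"
    return "beyond dual-link"

for w, h in [(1280, 800), (1920, 1200), (2560, 1600)]:
    print(f"{w}x{h}: ~{required_pixel_clock_mhz(w, h):.0f}MHz, {dvi_link_needed(w, h)}")
```

Under these assumptions, 1280x800 and 1920x1200 at 60Hz fit within a single link, while 2560x1600 needs roughly 269MHz, well past the single-link limit but inside what a dual link can carry.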

Above: Note the proprietary VIVO port next to the DVI ports on this NVIDIA 9800GX2 videocard

VIVO: The acronym stands for video in/video out (analog video, that is). Most videocards can output composite, S-video, or component video (listed in ascending order of quality), which makes them friendly to analog TVs. Support for analog video input, which is useful primarily for capturing video from VCRs and older camcorders, is much less common.

Blu-ray and HD DVD Support: As backward as it sounds, high-end videocards are less capable than mid-range videocards when it comes to decoding the high-resolution video streams (H.264, VC-1, and MPEG-2) recorded on commercial Blu-ray and HD DVD movies. AMD’s ATI Radeon HD 2600 XT and the upcoming RV670 fully offload the decode chores from the host CPU; the ATI Radeon HD 2900 XT does not. On the NVIDIA side, the GeForce 8600 GTS and the 8800 GT do, but the 8800 GTS, 8800 GTX, and 8800 Ultra do not.

PC Gamer is the global authority on PC games and has been covering PC gaming for more than 20 years. The site continues that legacy today with worldwide print editions and around-the-clock news, features, esports coverage, hardware testing, and game reviews on pcgamer.com, as well as the annual PC Gaming Show at E3.