4K gaming: the hardware isn't really ready, so how are developers making it happen? And what are the hidden costs?

Leading developers reveal the tricks they use to run games in 4K on PS4 Pro and Xbox One X – and how that processing power might be better used

Games are currently facing one of the sternest technical challenges they’ve ever had to grapple with. As TV and monitor makers have brought down the price of 4K displays, games have rushed to meet them pixel for pixel, with recent releases like Far Cry 5 running in native 4K on Xbox One X. Both Sony and Microsoft have launched upgraded consoles, Xbox One X and PS4 Pro, which are specifically marketed to cater to the demands that 4K imposes, but the maths is tricky. 4K is the biggest leap in pixel count that game hardware has ever had to accomplish, and with the way modern graphics processes work, pixels really matter. “The majority of work done in a frame is roughly proportional to the number of shaded pixels,” says graphics engineer Timothy Lottes, a member of GPU maker AMD’s Game Engineering team.

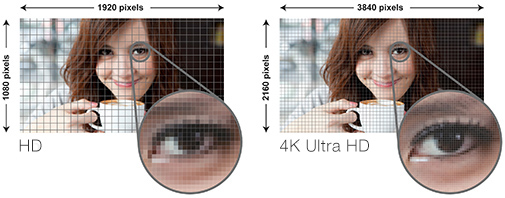

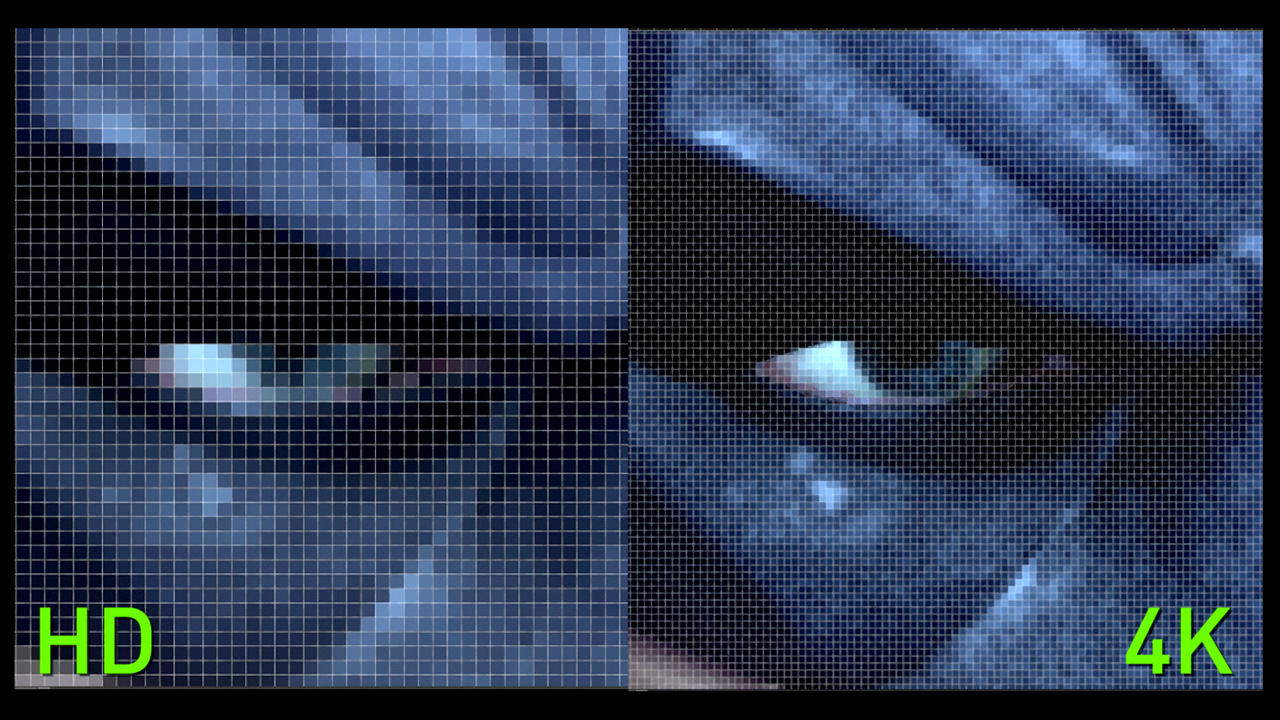

“To oversimplify it,” says Oli Wright, lead graphics team programmer on Codemasters’ forthcoming arcade racer, Onrush, “4K has four times the number of pixels compared to 1080p, but a PS4 Pro does not have four times the power of a PS4.” In other words, consoles have not kept in step with the additional requirements of 4K – not even Xbox One X. Lottes estimates that to achieve roughly the same kind of visual quality as a PS4 game at a rate of 30 frames per second, 4K requires around 7.4 teraflops (trillions of floating-point operations per second). PS4 Pro checks in at 4.2 Tflops, and Xbox One X at 6 Tflops. As Lottes mentions, his methodology is a great oversimplification of a complex situation which doesn’t take into account such factors as the memory bandwidth of each machine, but it does give an idea of how prepared the current generation is for 4K.

How developers overcome 4K's technical hurdles

Developers must therefore use a number of tricks to achieve 4K output while also reaching the same level of visual detail that their games can achieve in 1080p. Onrush, for example, only renders half of 4K’s pixels, so the workload is roughly double that of rendering at 1080p rather than quadruple. “We then use a temporal reconstruction technique to provide a unified antialiasing and upscaling solution for 4K that does a really good job of creating all the extra pixels,” Wright says. Temporal reconstruction looks back at previous frames to make guesses about how to fill in the new one; another similar solution is checkerboard rendering, which renders alternating pixels to achieve much the same effect but with different pros and cons. “We’ve not had to deal with a four-times jump in pixel count before,” Wright continues. “It would be a different proposition if it weren’t for techniques like checkerboard rendering and temporal reconstruction. Those techniques allow us to pretend we’re still dealing with a two-times jump.”
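To make the idea concrete, here is a toy sketch of the checkerboard approach described above. It is not Codemasters’ technique or any shipping implementation: the function names are hypothetical, the "scene" is a tiny static grid, and copying a value stands in for running a pixel shader. Real reconstruction also has to cope with movement between frames, which this sketch ignores entirely.

```python
def checkerboard_mask(width, height, parity):
    """True where a pixel is shaded this frame; the pattern flips with parity (0 or 1)."""
    return [[(x + y) % 2 == parity for x in range(width)] for y in range(height)]


def render_checkerboard(scene, previous, parity):
    """Shade half the pixels from `scene` and reuse `previous` for the rest.

    `scene` and `previous` are 2D lists of equal size.
    Returns the reconstructed frame and the number of pixels shaded.
    """
    height, width = len(scene), len(scene[0])
    mask = checkerboard_mask(width, height, parity)
    frame = [row[:] for row in previous]  # start from last frame's pixels
    shaded = 0
    for y in range(height):
        for x in range(width):
            if mask[y][x]:
                frame[y][x] = scene[y][x]  # stand-in for running the pixel shader
                shaded += 1
    return frame, shaded


# Toy 4x4 scene whose pixel values never change between frames (an assumption).
scene = [[10 * y + x for x in range(4)] for y in range(4)]
blank = [[None] * 4 for _ in range(4)]

frame0, shaded0 = render_checkerboard(scene, blank, parity=0)
frame1, shaded1 = render_checkerboard(scene, frame0, parity=1)

print(shaded0, shaded1)   # pixels shaded in each frame
print(frame1 == scene)    # whether two half-frames rebuild the full image
```

Each frame shades only half the grid, yet after two frames every pixel has been filled in. That only works here because nothing in the toy scene moves, which is exactly the part that makes real temporal techniques hard.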

"At Sumo we have not made any sacrifices to the gameplay to support 4K rendering"

Ash Bennett, Studio Technical Director

Not all developers are having to use these tricks. Sumo Digital, for example, which is currently working on the 4K-supporting Crackdown 3, has looked into checkerboard rendering but so far hasn’t needed to implement it, enabling Microsoft to market Crackdown 3 as ‘true 4K’. “So far, the usual graphics optimisations have been sufficient,” says studio technical director Ash Bennett. “We’ll always support 4K mode, if we’re intending on targeting it, from the earliest point in the production cycle. This makes things easier from a cost and workflow perspective as opposed to trying to retrofit support at the end.” For Sumo, thinking about 4K early is a good way of managing its demands.

Over the past few months accusations have circulated that developers are focusing system resources too heavily on graphics and away from other aspects of their games, such as physics, AI and other elements of general world simulation. One popular video pointed out differences between Far Cry 2 and Far Cry 5, such as the dynamically growing trees and greater conflagrations in the 2008 game, which was widely interpreted as suggesting that Far Cry 5’s focus on visual fidelity had limited, or even made impossible, these features. Certainly, Lottes believes that the sheer jump between 1080p and 4K must be leading to some kinds of tradeoff in visual quality. But Bennett is firm about its real effects on the player experience. “At Sumo we have not made any sacrifices to the gameplay to support 4K rendering,” he says. “It is the CPU that tends to drive the game simulation, and supporting 4K mode does not take any more of this resource than it did previously.”

Do 4K visuals require games to be compromised?

For Alex Perkins, art director on Onrush, 4K is just another factor in the list of costs and benefits to the player that he typically thinks about. “If we wrote an incredible grass rendering technique, but it used 50 per cent of the available resources, then I would not consider that to be good value. Unless, that is, we were creating Grass Simulator 2018.” For him, Onrush’s temporal reconstruction technique costs 10 per cent of its overall frame budget and makes a high-definition and antialiased image that benefits players. “It hasn’t affected other aspects of the game because I can’t imagine spending that 10 per cent on anything that would give better value to the player.”

To go over the numbers, 4K’s native resolution of 3840×2160 pixels gives the screen a total of 8,294,400 pixels. This is four times the number of pixels that a Full HD, 1920×1080 pixel screen has. By comparison, the difference in pixels from the 1280×720 screen of the PS3/Xbox 360 era to 1080p was only a 2.25x leap, and that between the 640×480 screen of the PlayStation 2/Xbox/GameCube era to 720p was three times. In what’s technically just a jump of two console generations, gaming’s pixel count has risen by 27 times, from 307,200 to 8,294,400. But then again, perhaps it’s unfair to truly consider PS4 Pro and Xbox One X members of the same generation as their forebears.
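For anyone who wants to check that arithmetic, a few lines of Python will do it. The resolutions are the ones quoted above; the short labels such as "480p" are just shorthand chosen for this sketch.

```python
# Resolutions quoted in the article; the labels are shorthand for this sketch.
resolutions = {
    "480p": (640, 480),
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "4K": (3840, 2160),
}

# Total pixels on screen for each resolution.
pixels = {name: width * height for name, (width, height) in resolutions.items()}


def ratio(new, old):
    """How many times more pixels `new` has than `old`."""
    return pixels[new] / pixels[old]


print(pixels["4K"])            # 8294400
print(ratio("720p", "480p"))   # 3.0
print(ratio("1080p", "720p"))  # 2.25
print(ratio("4K", "1080p"))    # 4.0
print(ratio("4K", "480p"))     # 27.0
```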

It won’t come as a surprise that Crackdown 3’s 4K mode will run at 30 frames per second, even on the Xbox One X. Well, we say ‘even’. Lottes’ simplified rule-of-thumb performance bar for outputting 60 frames a second at 4K stands at 14.7 Tflops, which is over twice what Xbox One X is capable of. Indeed, it’s over what the current PC GPU leaders are capable of, too. Nvidia’s GeForce GTX 1080 Ti runs at 11.5 Tflops and AMD’s Vega 64 runs at 13.7 Tflops.
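Putting Lottes’ two rule-of-thumb bars next to the hardware figures quoted in this article shows how far each machine falls short. The sketch below simply divides one number by the other; the dictionary and function names are hypothetical, and the result inherits every oversimplification Lottes himself flags, memory bandwidth included.

```python
# Lottes' rule-of-thumb requirements as quoted in the article (Tflops).
REQUIRED_TFLOPS = {"4K at 30fps": 7.4, "4K at 60fps": 14.7}

# Hardware figures as quoted in the article (Tflops).
HARDWARE_TFLOPS = {
    "PS4 Pro": 4.2,
    "Xbox One X": 6.0,
    "GeForce 1080 Ti": 11.5,
    "Vega 64": 13.7,
}


def coverage(hardware, target):
    """Fraction of the estimated requirement that the hardware supplies."""
    return HARDWARE_TFLOPS[hardware] / REQUIRED_TFLOPS[target]


for target in REQUIRED_TFLOPS:
    for hardware in HARDWARE_TFLOPS:
        print(f"{hardware} vs {target}: {coverage(hardware, target):.0%}")
```

By this crude measure nothing on the list clears either bar, although Vega 64 gets within a few per cent of the 60 frames per second figure.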


These distinctions raise an important question about whether 4K should have the prominence it has as a new step in games’ ongoing technical development. Listen to Microsoft or Sony, Nvidia or AMD and you’d be forgiven for assuming that 4K is the only way games should go. From blast processing (a phrase that its creator later labelled as 'ghastly') to PS2's Emotion Engine, the history of videogames is littered with promises about the transformative powers of technology. For a medium that’s defined by the silicon which brings it to life, it’s inevitable that games should always be presented as being on the cusp of sublimity, just a generation away from what we all dream they can be.

They can always run that little bit faster, look that little bit sharper, be that bit more richly simulated. The history of games is a history of constant obsolescence, and that’s okay. Games are generally better for better technology. But it certainly can make it hard to tell what a genuine technological advance is, one which will come to help define what games will be in the future, or when it’s just a matter of taste – or even just something that’s being foisted on games. In other words, are more pixels really better?

Does 'perfecting' pixels beat adding more of them?

For Lottes, a high framerate is more important than resolution because it favours fluid motion and faster input response times, and he’s comfortable with the cost of lower resolution. In terms of pure numbers, though, he points out that targeting native 4K at 30 frames per second is equal in the rate of rendered pixels to targeting native 1080p at 120 frames per second. He acknowledges that this calculation is entirely theoretical, and doesn’t take into account situations in which a game’s speed might be limited by its CPU once it’s not bound by its GPU any more, but the prospect of being able to switch preferences between such starkly different standards for resolution versus framerate is tantalising. “This is why any news on console support for 120Hz is exciting to me,” Lottes says.
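The equivalence Lottes describes is easy to verify. This sketch counts nothing but pixels rendered per second, so like his own calculation it is purely theoretical and says nothing about CPU limits; the function name is made up for illustration.

```python
def pixels_per_second(width, height, fps):
    """Raw number of pixels rendered each second at a given resolution and framerate."""
    return width * height * fps


uhd_30 = pixels_per_second(3840, 2160, 30)    # native 4K at 30 frames per second
fhd_120 = pixels_per_second(1920, 1080, 120)  # native 1080p at 120 frames per second

print(uhd_30, fhd_120, uhd_30 == fhd_120)
```

Both targets work out to 248,832,000 pixels every second.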

But he goes further: “I think it would be an interesting world if we stuck at 720p and then just kept on scaling performance. Then developers would have more opportunity to use the GPU for more than just filling more pixels.” On one hand, that excess GPU power could be put into perfecting every pixel to better close the distance to CG movie visuals, employing high-quality antialiasing and lighting effects such as the realtime ray tracing showcased by Microsoft and Unreal at GDC this year. Or what about forgetting all that and putting it into world simulation to vastly expand the number of AI-controlled actors, physics and other complementary systems that go into producing dynamic and interactive places in which to play?

One of the biggest challenges that Codemasters has encountered in supporting 4K hasn’t been about processing power, but making art assets that look as good on less powerful hardware as they do in 4K. “For Xbox One X we’re using 4K by 4K textures,” says Onrush art director Alex Perkins. “It meant we had to work out a priority system for maintaining the detail on smaller memory formats, so that the lowest resolution versions of the textures look comparable without eliminating too much detail, to avoid the need for separate assets per platform.” 4K presents its own issues, but for a multi-platform developer supporting PS4 and Xbox One, as well as PS4 Pro and Xbox One X, not to mention PC, its high resolutions only add to the workload.

And that’s just in the realms of current-day system resources and display technology. What about more speculative concepts, such as dynamically changing resolution depending on where a player is looking? Resolution could be raised where the eye is directly facing, to feed the small central area with which the eye resolves the finest detail, while being reduced in the periphery, which is far better at perceiving motion. Is it really worthwhile to throw equal resources at every part of the screen when players’ attention is so focused on the middle? In many ways, visual technology for games today takes a crudely brute-force approach.
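As a rough illustration of that speculative idea, the sketch below picks a resolution scale for a point on screen based on how far it sits from where the player is looking. Everything in it is an assumption made for illustration: the function name, the radii, the minimum scale and the straight-line falloff are invented, and nothing here describes how any console or headset actually does it.

```python
import math


def resolution_scale(pixel, gaze, inner_radius, outer_radius, min_scale=0.25):
    """Return a resolution scale between min_scale and 1.0 for a screen position.

    Full resolution inside inner_radius of the gaze point, min_scale beyond
    outer_radius, and a straight-line blend in between. All values are
    illustrative assumptions, measured in pixels.
    """
    distance = math.dist(pixel, gaze)
    if distance <= inner_radius:
        return 1.0
    if distance >= outer_radius:
        return min_scale
    t = (distance - inner_radius) / (outer_radius - inner_radius)
    return 1.0 - t * (1.0 - min_scale)


gaze = (1920, 1080)  # player looking at the centre of a 3840x2160 screen

print(resolution_scale((1920, 1080), gaze, 200, 800))  # at the gaze point
print(resolution_scale((2420, 1080), gaze, 200, 800))  # 500 pixels away
print(resolution_scale((0, 0), gaze, 200, 800))        # far corner of the screen
```

With these made-up settings the centre of attention gets full resolution, a spot 500 pixels away gets 62.5 per cent, and the far corner drops to a quarter.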

But as games have proven time and time again throughout their history, marketing leads everything. Improved visuals will always sell a game better than a difficult-to-visualise, more richly simulated world, or a framerate that YouTube can’t support. And that effect is doubled when 4K TVs are increasingly ubiquitous. If you’ve just bought one, you’ll want to take the best advantage of it. None of this is to deny that 4K can be stunning to behold and play. “[4K is] important to us because it makes a difference,” says Codemasters’ Wright. “You could certainly argue that when watching a movie at a typical viewing distance, 4K offers very little over 2K. I can’t speak for everybody, but I know every gamer in my house sits a lot closer to the TV when gaming. 4K is definitely better for games.”

4K is here to stay and developers have the tools to reach its demands, even if the hardware is technically lacking. But to think it’s the only advance gaming can make would be to forget that the power of today’s consoles and PCs can do so much more than simply throw millions of pixels at the screen. The quality of them matters, too.

This article is taken from Edge Magazine issue 320 and you can subscribe to a print and digital bundle for as little as £17 a quarter.